Introduction to Prometheus

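With the rapid development of container technology, Kubernetes has become the container cluster management system of choice. Prometheus is an important member of the Cloud Native Computing Foundation (CNCF) ecosystem, with activity second only to Kubernetes, and it is now widely used to monitor Kubernetes clusters.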

This article briefly introduces the components and key concepts of Prometheus. It then walks through installing, configuring, and using it.

Features of Prometheus:

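- A multi-dimensional data model: each time series is identified by a metric name and a set of key/value labels.

- A flexible query language (PromQL).

- No reliance on distributed storage; each server node is autonomous.

- Time series are collected via a pull model over HTTP.

- Time series can also be pushed through an intermediary gateway.

- Targets are discovered via service discovery or static configuration.

- Support for a wide range of graphing and dashboarding tools, such as Grafana.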

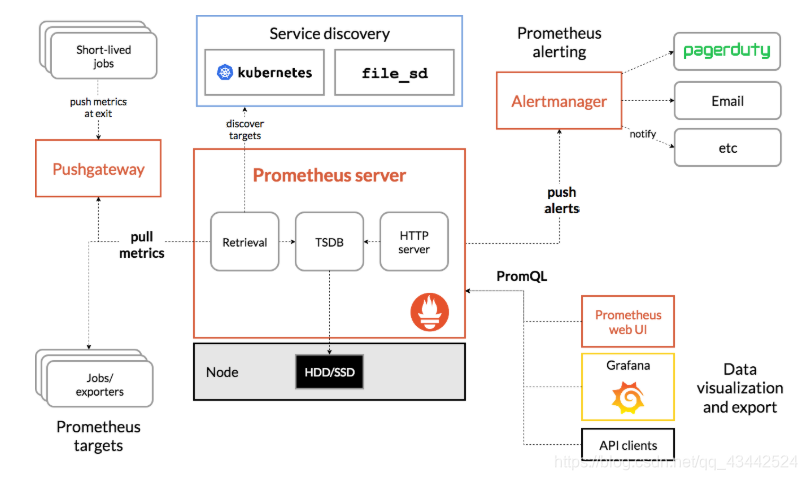

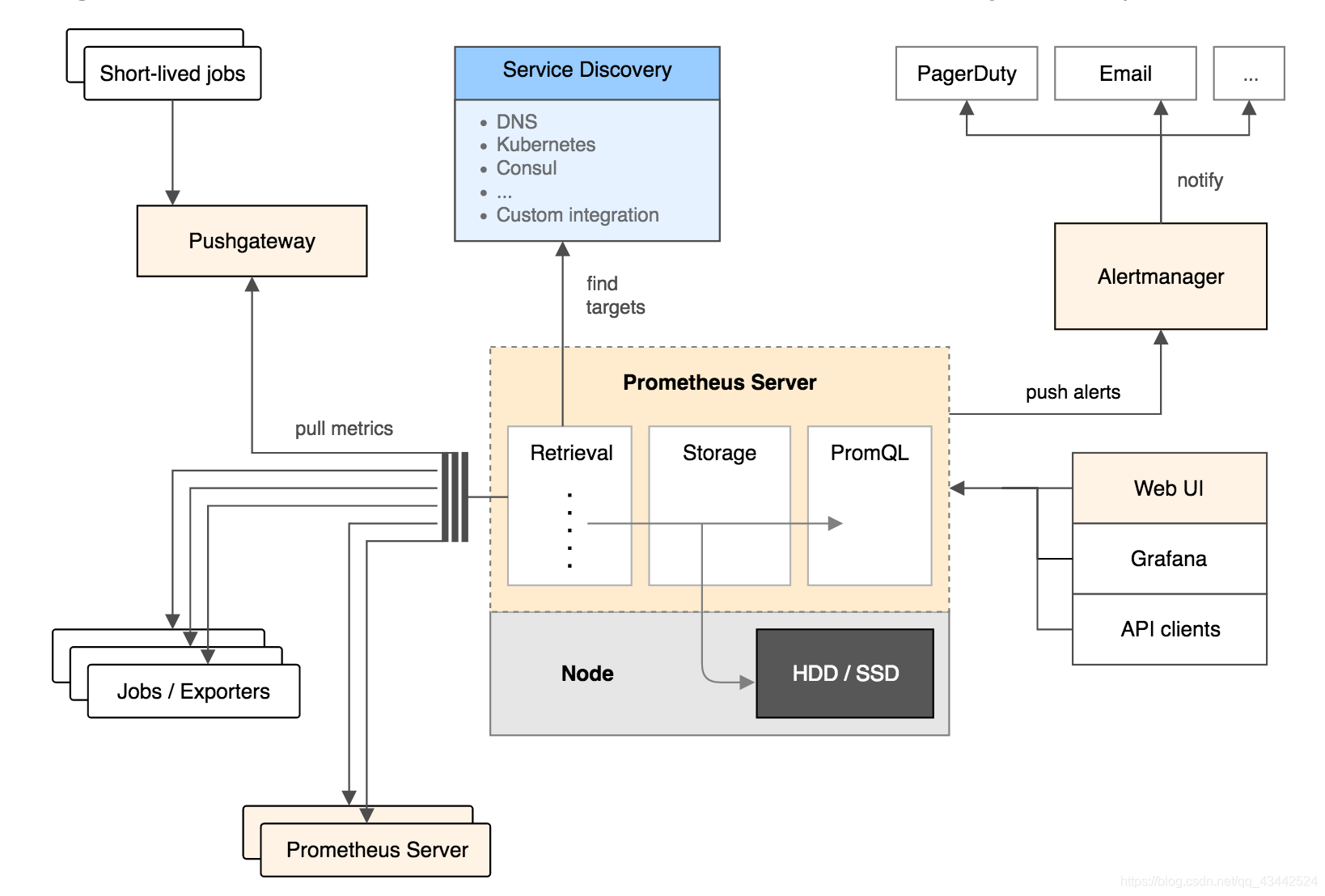

Official architecture diagram

Official website: https://prometheus.io/

The Prometheus ecosystem consists of multiple components, many of which are optional:

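- Prometheus Server: scrapes and stores time series data.

- Client Library: client libraries let a monitored service generate its metrics and expose them to the Prometheus server. When the Prometheus server pulls, the service returns the current values of its metrics.

- Push Gateway: mainly for short-lived jobs. Such jobs may exit before Prometheus gets a chance to pull from them. Instead, they push their metrics to the Pushgateway, and Prometheus then scrapes the Pushgateway. This approach is mainly intended for service-level metrics; for machine-level metrics, use node exporter.

- Exporters: expose the metrics of existing third-party services to Prometheus.

- Alertmanager: receives alerts from the Prometheus server, deduplicates and groups them, and routes them to the appropriate receivers, which send the notifications. Common receivers include email, PagerDuty, OpsGenie, webhooks, and various other tools.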
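The Pushgateway flow described above can be sketched with Python's standard library. This is a minimal sketch, not an official client. The gateway address `http://pushgateway.example:9091`, the job name `backup_job`, and the metric `backup_last_success_timestamp_seconds` are hypothetical.

The function builds, but does not send, a request to the Pushgateway's `/metrics/job/<job>` endpoint. The body is in the Prometheus text exposition format. `PUT` replaces every metric previously pushed for that job, whereas `POST` would only replace metrics with the same names.

```python
import urllib.request


def build_push_request(gateway: str, job: str, metrics: dict[str, float]) -> urllib.request.Request:
    """Build (but do not send) a Pushgateway request for one job.

    gateway: base URL of a Pushgateway (hypothetical address in the example below).
    job: assumed to be a plain name without '/' or other characters needing escaping.
    metrics: metric name -> value, rendered as one "name value" line per sample.
    """
    # Text exposition format: one sample per line; the body must end with a newline.
    body = "".join(f"{name} {value}\n" for name, value in metrics.items())
    url = f"{gateway.rstrip('/')}/metrics/job/{job}"
    return urllib.request.Request(
        url,
        data=body.encode("utf-8"),
        method="PUT",  # PUT replaces all metrics previously pushed for this job
        headers={"Content-Type": "text/plain; version=0.0.4"},
    )


if __name__ == "__main__":
    req = build_push_request(
        "http://pushgateway.example:9091",  # hypothetical Pushgateway address
        "backup_job",  # hypothetical job name
        {"backup_last_success_timestamp_seconds": 1700000000},  # hypothetical metric
    )
    print(req.get_method(), req.full_url)
    print(req.data.decode("utf-8"), end="")
    # Sending it for real would be: urllib.request.urlopen(req, timeout=5)
```

Prometheus never receives the pushed data directly. It scrapes the Pushgateway like any other target, which is why the job can exit immediately after the request.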

How Prometheus works

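Prometheus works by periodically scraping the state of monitored components over HTTP. Any component that exposes a suitable HTTP endpoint can be monitored, with no SDK or other integration work required. This makes Prometheus well suited to monitoring virtualized environments such as VMs, Docker, and Kubernetes. A component that exposes a monitored system's metrics over such an HTTP endpoint is called an exporter. Ready-made exporters already exist for most components commonly used by internet companies, such as Varnish, HAProxy, Nginx, MySQL, and Linux system information (disk, memory, CPU, network, and so on).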
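To make the pull model concrete: an exporter is simply an HTTP server that returns plain-text metrics when Prometheus requests them. Below is a minimal, hypothetical exporter that uses only Python's standard library and serves `/metrics` in the Prometheus text exposition format. The metric name `demo_requests_total`, the label `path`, and port 8000 are illustrative assumptions, not part of any real exporter. Real services would normally use an official client library instead.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

# Hypothetical in-process counters: value of the "path" label -> request count.
REQUEST_COUNTS = {"/": 3, "/login": 1}


def render_metrics(counts: dict[str, int]) -> str:
    """Render counters in the Prometheus text exposition format."""
    lines = [
        "# HELP demo_requests_total Number of requests handled, by path.",
        "# TYPE demo_requests_total counter",
    ]
    for path, value in sorted(counts.items()):
        # Label values are double-quoted; backslash, quote and newline must be escaped.
        escaped = path.replace("\\", "\\\\").replace('"', '\\"').replace("\n", "\\n")
        lines.append(f'demo_requests_total{{path="{escaped}"}} {value}')
    return "\n".join(lines) + "\n"


class MetricsHandler(BaseHTTPRequestHandler):
    """Answer GET /metrics with the current counters; everything else is 404."""

    def do_GET(self):
        if self.path != "/metrics":
            self.send_error(404)
            return
        body = render_metrics(REQUEST_COUNTS).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; version=0.0.4")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def serve(port: int = 8000) -> None:
    """Block forever, answering scrapes on http://<host>:<port>/metrics."""
    HTTPServer(("0.0.0.0", port), MetricsHandler).serve_forever()
```

Calling `serve()` and adding `<host>:8000` as a scrape target would let Prometheus pull these series. Each scrape returns lines such as `demo_requests_total{path="/"} 3`.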

Deploying Prometheus

1. Modify grafana-service.yaml

Clone the kube-prometheus project with git:

```shell
[root@k8s-master01 plugin]# mkdir prometheus
[root@k8s-master01 plugin]# cd prometheus/
[root@k8s-master01 prometheus]# git clone https://github.com/coreos/kube-prometheus.git
Cloning into 'kube-prometheus'...
remote: Enumerating objects: 4, done.
remote: Counting objects: 100% (4/4), done.
remote: Compressing objects: 100% (4/4), done.
remote: Total 8171 (delta 0), reused 1 (delta 0), pack-reused 8167
Receiving objects: 100% (8171/8171), 4.56 MiB | 57.00 KiB/s, done.
Resolving deltas: 100% (4936/4936), done.
[root@k8s-master01 prometheus]# cd kube-prometheus/manifests/
[root@k8s-master01 manifests]# vim grafana-service.yaml
```

Change the Service to type NodePort so that Grafana can be reached from outside the cluster:

```yaml
apiVersion: v1
kind: Service
metadata:
  labels:
    app: grafana
  name: grafana
  namespace: monitoring
spec:
  type: NodePort      # added
  ports:
  - name: http
    port: 3000
    targetPort: http
    nodePort: 30100   # added
  selector:
    app: grafana
```

2. Modify prometheus-service.yaml

In the same way, set `type: NodePort` and add `nodePort: 30200` to the Service's port 9090.

3. Modify alertmanager-service.yaml

In the same way, set `type: NodePort` and add `nodePort: 30300` to the Service's port 9093.
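Instead of editing these two files, you could patch the live Services with `kubectl patch` once step 4 has deployed them. The Python sketch below only prints the commands. It makes three assumptions:

- the Service names are `prometheus-k8s` and `alertmanager-main`, as in the `kubectl get svc` output in this article;
- the NodePorts are 30200 and 30300;
- the port to expose is the first entry in `spec.ports`. Check this against your version of the manifests.

```python
import json
import shlex

# Assumed Service name -> desired NodePort (the values used in this article).
NODE_PORTS = {"prometheus-k8s": 30200, "alertmanager-main": 30300}


def nodeport_patch(node_port: int) -> list[dict]:
    """JSON Patch (RFC 6902) that switches a Service to NodePort on its first port."""
    if not 30000 <= node_port <= 32767:
        # Default --service-node-port-range of the Kubernetes API server.
        raise ValueError(f"nodePort {node_port} is outside the default range 30000-32767")
    return [
        {"op": "replace", "path": "/spec/type", "value": "NodePort"},
        # Assumption: the port to expose is spec.ports[0].
        {"op": "add", "path": "/spec/ports/0/nodePort", "value": node_port},
    ]


def patch_command(service: str, node_port: int, namespace: str = "monitoring") -> str:
    """Return a shell-quoted `kubectl patch --type=json` command line."""
    patch = json.dumps(nodeport_patch(node_port), separators=(",", ":"))
    args = ["kubectl", "-n", namespace, "patch", "svc", service, "--type=json", "-p", patch]
    return shlex.join(args)


if __name__ == "__main__":
    for svc, port in NODE_PORTS.items():
        print(patch_command(svc, port))
```

Editing the YAML before the first `kubectl apply` keeps the change in the manifests. Patching changes only the running objects, and a later `kubectl apply` of the unmodified files could revert it.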

4. Deploy with kubectl apply

From the kube-prometheus directory, run `kubectl apply -f manifests/`.

The first run may report errors such as:

```
unable to recognize "../manifests/alertmanager-alertmanager.yaml": no matches for kind "Alertmanager" in version "monitoring.coreos.com/v1"
unable to recognize "../manifests/alertmanager-serviceMonitor.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"
unable to recognize "../manifests/grafana-serviceMonitor.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"
unable to recognize "../manifests/kube-state-metrics-serviceMonitor.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"
unable to recognize "../manifests/node-exporter-serviceMonitor.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"
unable to recognize "../manifests/prometheus-operator-serviceMonitor.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"
unable to recognize "../manifests/prometheus-prometheus.yaml": no matches for kind "Prometheus" in version "monitoring.coreos.com/v1"
unable to recognize "../manifests/prometheus-rules.yaml": no matches for kind "PrometheusRule" in version "monitoring.coreos.com/v1"
unable to recognize "../manifests/prometheus-serviceMonitor.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"
unable to recognize "../manifests/prometheus-serviceMonitorApiserver.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"
unable to recognize "../manifests/prometheus-serviceMonitorCoreDNS.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"
unable to recognize "../manifests/prometheus-serviceMonitorKubeControllerManager.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"
unable to recognize "../manifests/prometheus-serviceMonitorKubeScheduler.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"
unable to recognize "../manifests/prometheus-serviceMonitorKubelet.yaml": no matches for kind "ServiceMonitor" in version "monitoring.coreos.com/v1"
```

These errors mean the API server did not yet recognize the custom resource kinds `Alertmanager`, `Prometheus`, `PrometheusRule`, and `ServiceMonitor`. In the version used here, their CustomResourceDefinitions are created by the same `kubectl apply` run, but they take a few seconds to be registered. Wait briefly and run `kubectl apply -f manifests/` again. Newer kube-prometheus releases put the CRDs in `manifests/setup`, which is applied first.
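The "wait and re-run" step can be scripted. The Python sketch below is one hypothetical way to do it; the attempt count and delay are arbitrary assumptions. It re-runs `kubectl apply -f manifests/` until the command exits with status 0, which covers the case where the CRDs need a few seconds to be registered. The command runner and sleep function are parameters, so the retry logic can be exercised without a cluster.

```python
import subprocess
import time
from typing import Callable, Sequence

# Run from the kube-prometheus directory, as in the step above.
APPLY_CMD = ["kubectl", "apply", "-f", "manifests/"]


def apply_until_ok(
    run: Callable[[Sequence[str]], int],
    attempts: int = 5,  # arbitrary assumption
    delay_seconds: float = 5.0,  # arbitrary assumption
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Run APPLY_CMD until it exits with 0; return the number of attempts used."""
    for attempt in range(1, attempts + 1):
        if run(APPLY_CMD) == 0:
            return attempt
        if attempt < attempts:
            # Most likely the CRDs are not registered yet: wait, then try again.
            sleep(delay_seconds)
    raise RuntimeError(f"kubectl apply still failing after {attempts} attempts")


def run_kubectl(cmd: Sequence[str]) -> int:
    """Run the command for real and return its exit code."""
    return subprocess.run(list(cmd)).returncode


# Usage, from the kube-prometheus directory: apply_until_ok(run_kubectl)
```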

Once the resources have been created, check them:

```shell
[root@k8s-master01 manifests]# kubectl get svc -n monitoring
NAME                    TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)             AGE
alertmanager-main       NodePort    10.102.129.38    <none>        9093:30300/TCP      15s
alertmanager-operated   ClusterIP   None             <none>        9093/TCP,6783/TCP   8s
grafana                 NodePort    10.103.207.222   <none>        3000:30100/TCP      14s
kube-state-metrics      ClusterIP   None             <none>        8443/TCP,9443/TCP   14s
node-exporter           ClusterIP   None             <none>        9100/TCP            14s
prometheus-adapter      ClusterIP   10.104.146.228   <none>        443/TCP             13s
prometheus-k8s          NodePort    10.100.247.74    <none>        9090:30200/TCP      12s
prometheus-operator     ClusterIP   None             <none>        8080/TCP            15s
[root@k8s-master01 manifests]# kubectl get pods -n monitoring
NAME                                  READY   STATUS    RESTARTS   AGE
alertmanager-main-0                   2/2     Running   1          111s
grafana-7dc5f8f9f6-r9w78              1/1     Running   0          117s
kube-state-metrics-5cbd67455c-q5hlh   4/4     Running   0          97s
node-exporter-5bjhk                   2/2     Running   0          116s
node-exporter-n84tr                   2/2     Running   0          115s
node-exporter-xbz84                   2/2     Running   0          115s
prometheus-adapter-668748ddbd-c9ws6   1/1     Running   0          115s
prometheus-k8s-0                      3/3     Running   1          101s
prometheus-k8s-1                      3/3     Running   1          101s
prometheus-operator-7447bf4dcb-jfmsn  1/1     Running   0          117s
[root@k8s-master01 manifests]#
```

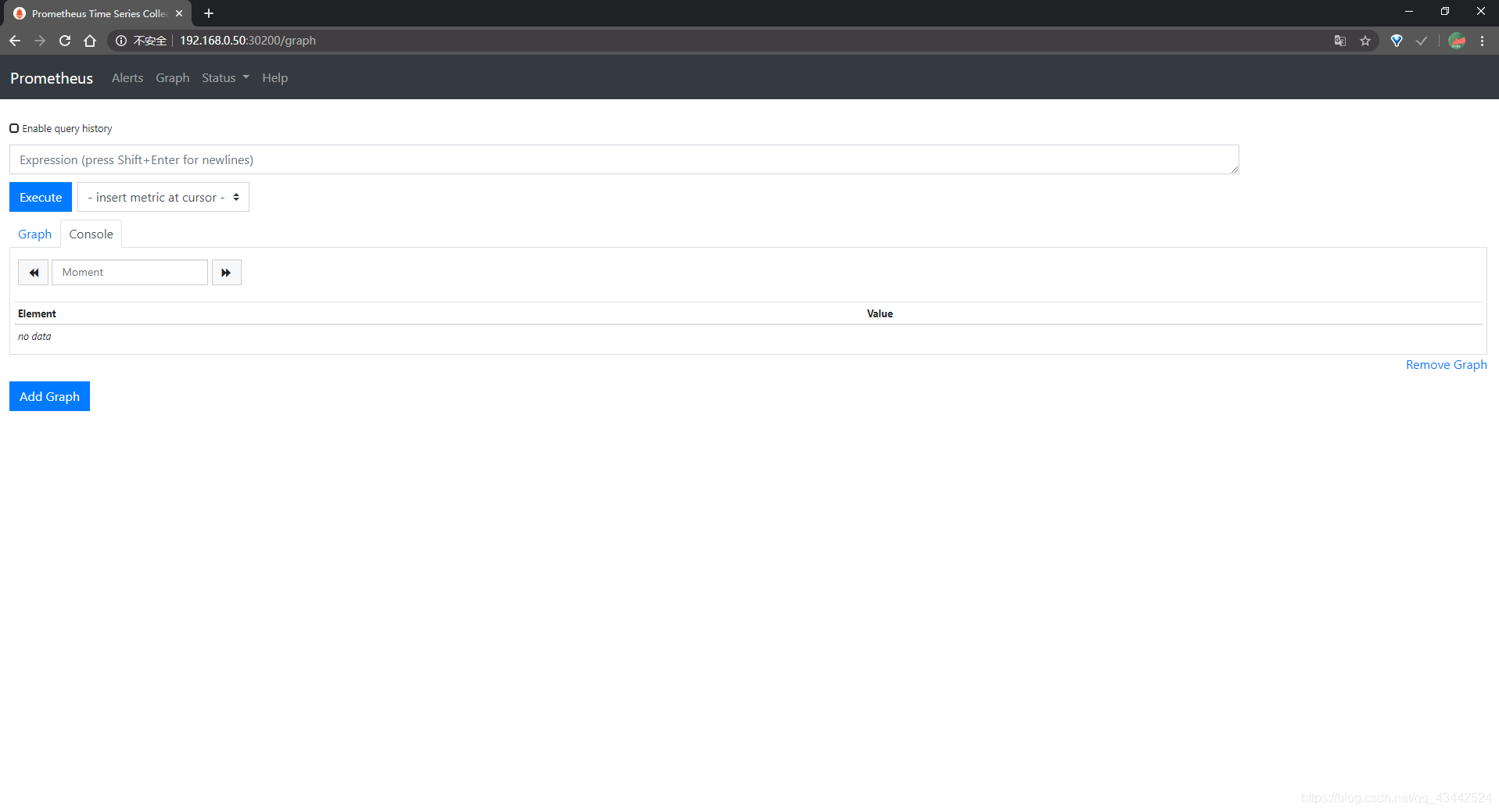

Accessing Prometheus

Prometheus is exposed on NodePort 30200; open http://MasterIP:30200 in a browser.

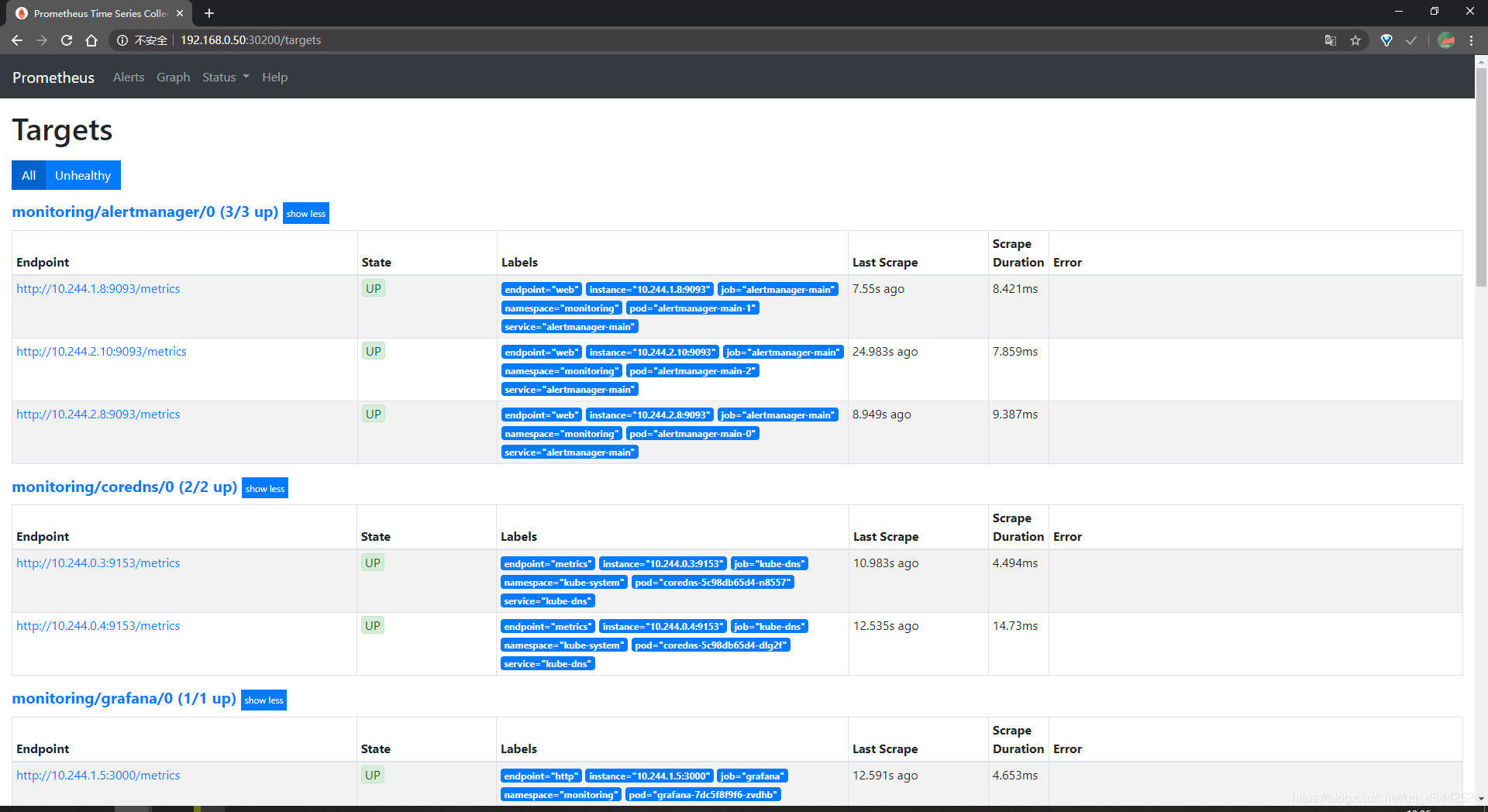

The targets page at http://MasterIP:30200/targets shows that Prometheus has successfully connected to the Kubernetes API server.

All targets are reported as healthy (UP).

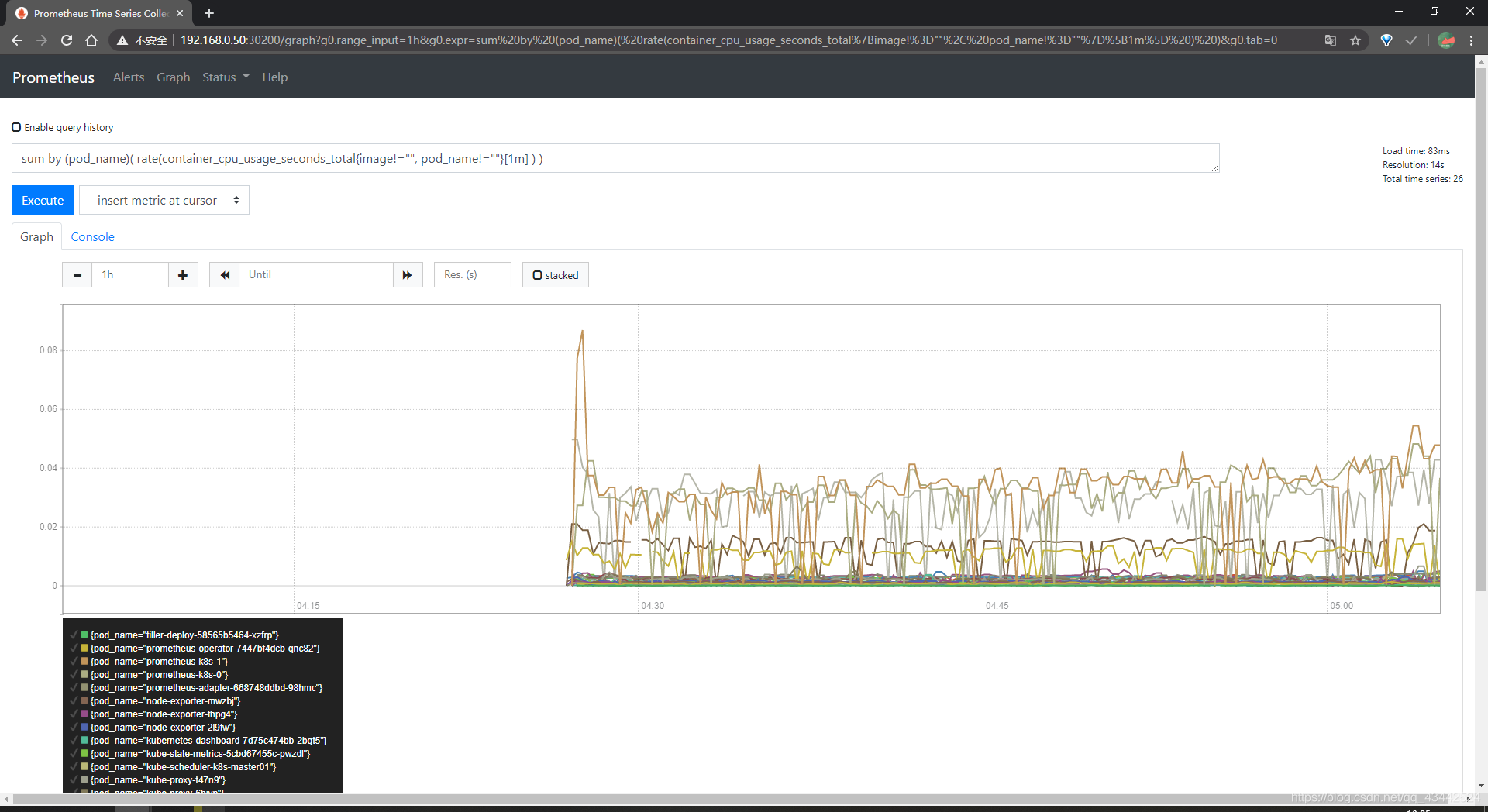
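The health information shown on the targets page is also available from the Prometheus HTTP API at `/api/v1/targets`. Below is a minimal Python sketch that fetches this endpoint and lists every active target whose `health` is not `up`. The base URL follows this article's NodePort, and `MasterIP` is a placeholder for your node's address.

```python
import json
import urllib.request


def unhealthy_targets(payload: dict) -> list[tuple[str, str, str]]:
    """Return (scrapePool, scrapeUrl, lastError) for every active target that is not 'up'."""
    if payload.get("status") != "success":
        raise ValueError(f"unexpected API status: {payload.get('status')!r}")
    return [
        (t.get("scrapePool", ""), t.get("scrapeUrl", ""), t.get("lastError", ""))
        for t in payload["data"]["activeTargets"]
        if t.get("health") != "up"
    ]


def fetch_targets(base_url: str) -> dict:
    """GET <base_url>/api/v1/targets and decode the JSON body."""
    with urllib.request.urlopen(f"{base_url}/api/v1/targets", timeout=10) as resp:
        return json.load(resp)


# Usage (MasterIP is a placeholder):
#   for pool, url, err in unhealthy_targets(fetch_targets("http://MasterIP:30200")):
#       print(pool, url, err)
```

An empty list corresponds to the "all targets UP" state described above.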

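The Prometheus web UI supports basic ad-hoc queries. For example, the following query returns the CPU usage of each pod in the cluster:

```promql
sum by (pod_name)(rate(container_cpu_usage_seconds_total{image!="", pod_name!=""}[1m]))
```

If the query returns data, Prometheus is scraping container metrics correctly. These container metrics come from cAdvisor, which is built into the kubelet, rather than from node-exporter. On Kubernetes 1.16 and later, the `pod_name` label is named `pod`.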

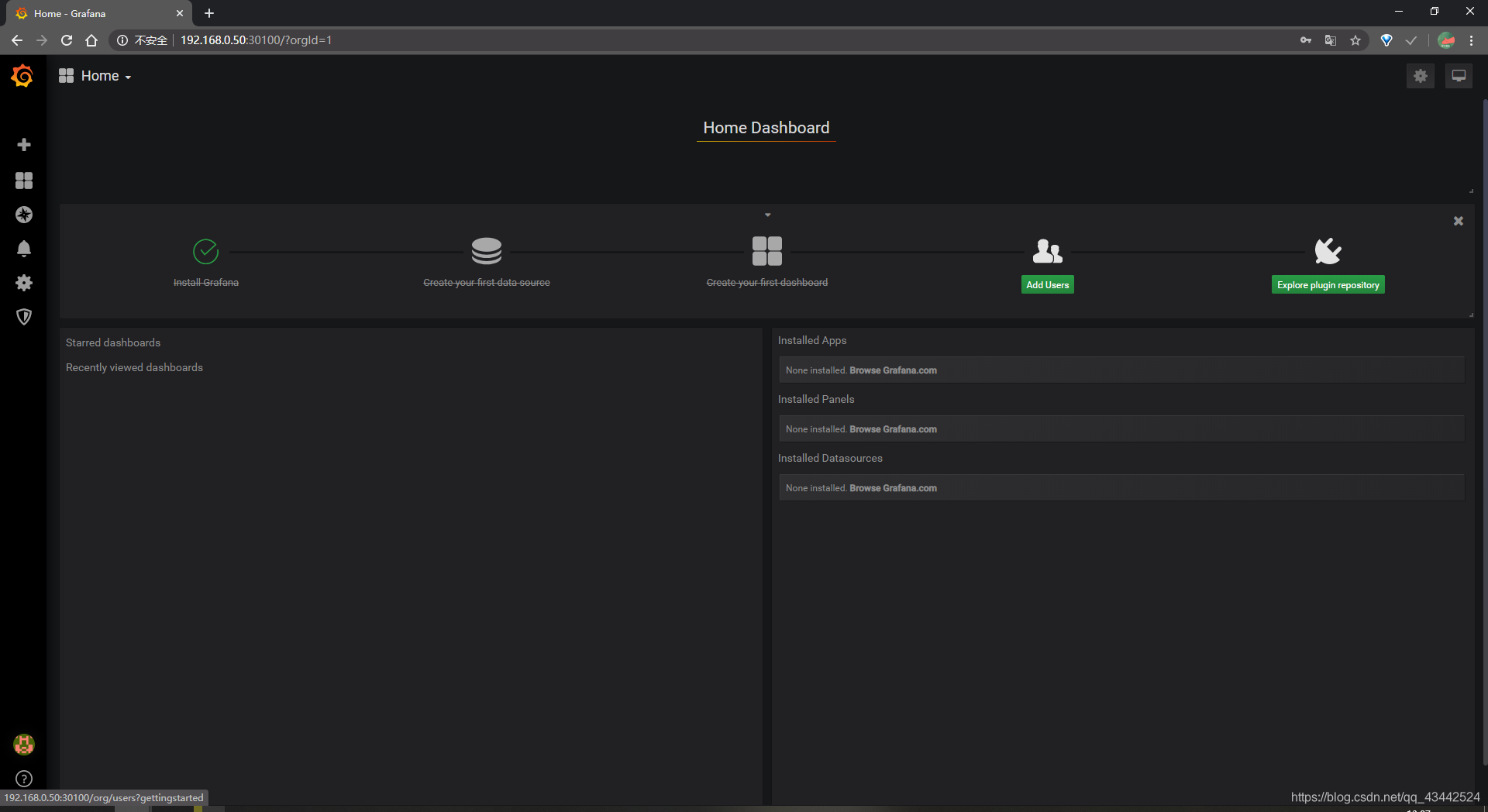
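The same query can be sent to the Prometheus HTTP API endpoint `/api/v1/query`, for example from a script. Below is a minimal Python sketch. The base URL is a placeholder, and the label name `pod_name` is kept from the query above; on newer clusters, replace it with `pod`. Each result value is the per-pod CPU usage in cores, because `rate()` of a seconds counter yields CPU-seconds per second.

```python
import json
import urllib.parse
import urllib.request

QUERY = 'sum by (pod_name)(rate(container_cpu_usage_seconds_total{image!="", pod_name!=""}[1m]))'


def query_url(base_url: str, promql: str) -> str:
    """Build an instant-query URL; the PromQL expression must be URL-encoded."""
    return f"{base_url}/api/v1/query?" + urllib.parse.urlencode({"query": promql})


def cpu_by_pod(payload: dict) -> dict[str, float]:
    """Map pod_name -> CPU cores from an instant-vector result."""
    # Each sample looks like {"metric": {...labels...}, "value": [timestamp, "value-as-string"]}.
    return {
        sample["metric"].get("pod_name", ""): float(sample["value"][1])
        for sample in payload["data"]["result"]
    }


def run_query(base_url: str, promql: str = QUERY) -> dict:
    """Execute the query and decode the JSON response."""
    with urllib.request.urlopen(query_url(base_url, promql), timeout=10) as resp:
        return json.load(resp)


# Usage (MasterIP is a placeholder): print(cpu_by_pod(run_query("http://MasterIP:30200")))
```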

Accessing Grafana

Find the NodePort exposed by the grafana Service:

```shell
kubectl get service -n monitoring | grep grafana
grafana   NodePort   10.107.56.143   <none>   3000:30100/TCP
```

Open http://MasterIP:30100 in a browser.

The default username and password are admin/admin.

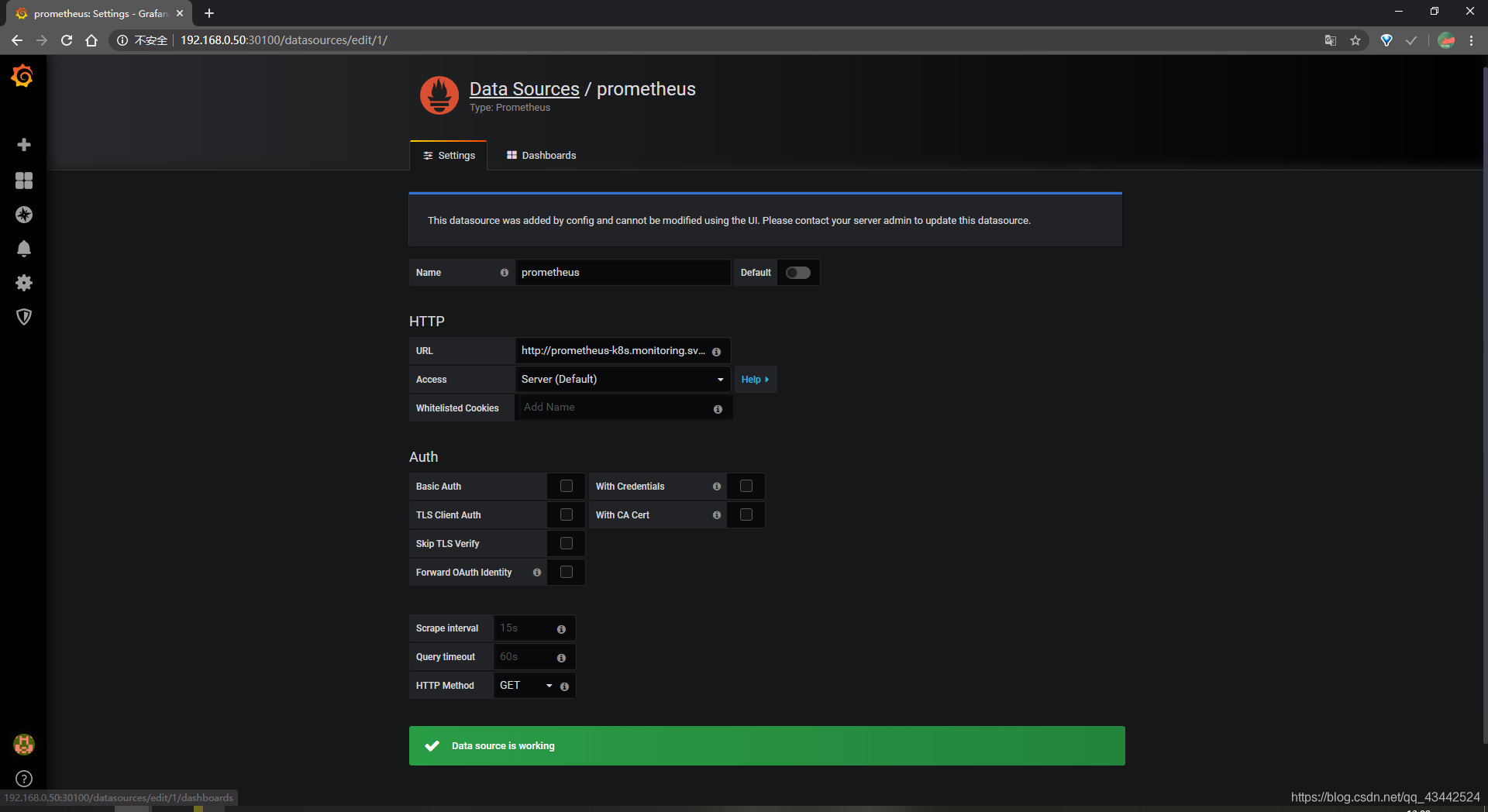
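Grafana also has an HTTP API that accepts the same login via HTTP basic auth. Below is a minimal Python sketch that lists the configured data sources through `/api/datasources`. The base URL is a placeholder, and admin/admin is simply the default login mentioned above.

```python
import base64
import json
import urllib.request


def grafana_request(base_url: str, path: str, user: str, password: str) -> urllib.request.Request:
    """Build a GET request carrying HTTP basic-auth credentials."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        f"{base_url.rstrip('/')}{path}",
        headers={"Authorization": f"Basic {token}", "Accept": "application/json"},
    )


def list_datasources(base_url: str, user: str = "admin", password: str = "admin") -> list[dict]:
    """GET /api/datasources and decode the JSON list."""
    req = grafana_request(base_url, "/api/datasources", user, password)
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)


# Usage (MasterIP is a placeholder):
#   for ds in list_datasources("http://MasterIP:30100"):
#       print(ds["name"], ds["type"])
```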

Viewing Kubernetes API server data